Amazon revealed on Wednesday that it is building AI-powered smart glasses designed to make deliveries faster and easier for its drivers. The company wants to give drivers a hands-free tool that lets them focus on their work without constantly switching between their phones, packages, and surroundings.

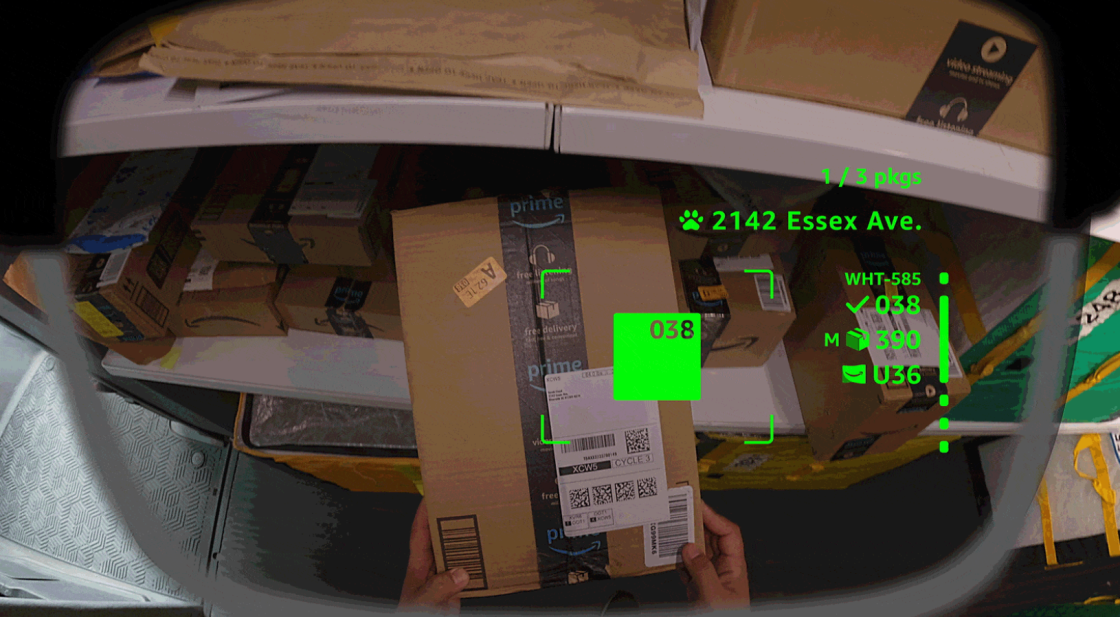

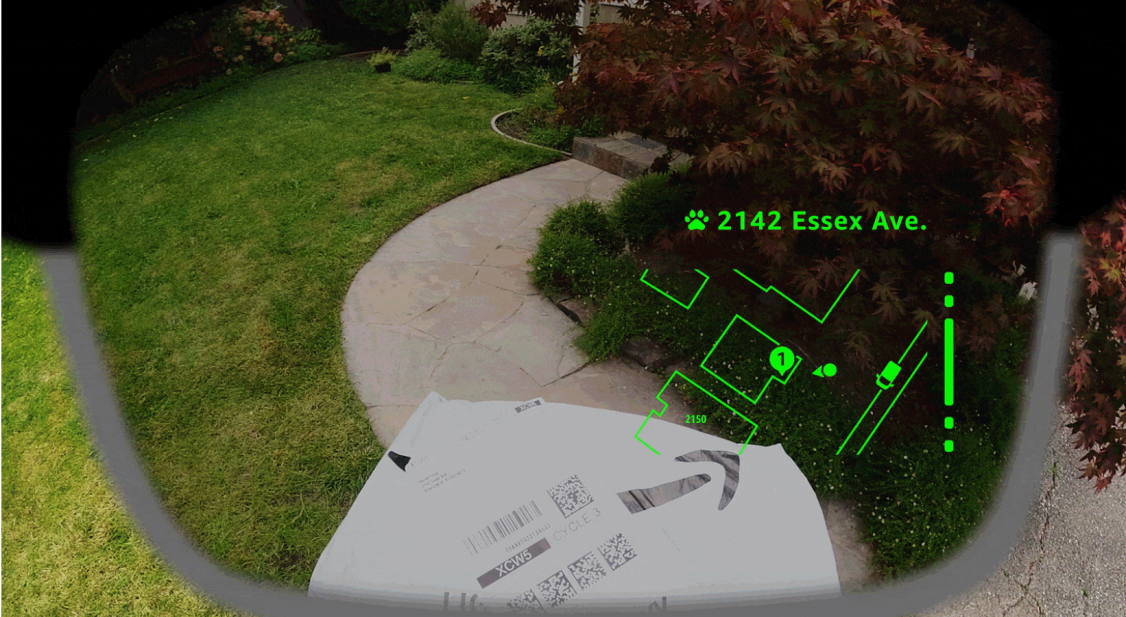

According to information gathered by TechMarge, the new glasses will let drivers scan packages, follow walking directions, and capture proof of delivery, all without touching their phones. The device uses artificial intelligence, computer vision, and small cameras to create an interactive display that highlights delivery tasks and alerts drivers to possible hazards along their routes.

Amazon aims to save time on every delivery by showing critical information directly in front of a driver’s eyes. When a driver parks at a delivery stop, the glasses activate automatically and help the driver locate the right package in the vehicle. The device then provides simple visual directions to the delivery address. The technology also helps drivers navigate complex areas such as apartment buildings, gated communities, and business centers, where multiple delivery points can be confusing.

Each pair of glasses connects to a controller built into the delivery vest. The controller houses the operational buttons, a removable battery, and an emergency alert switch. The glasses can support prescription lenses as well as adaptive lenses that adjust automatically to lighting changes.

Amazon is currently testing the glasses with delivery drivers across North America, gathering feedback and refining the technology before expanding the rollout. Reports from TechMarge indicate that Amazon began exploring this concept last year, so the official unveiling didn’t come as a surprise to those following the project’s development.

The company also plans to upgrade the glasses with new capabilities. They will soon offer real-time defect detection, warning drivers if they leave a package at the wrong address. The system will eventually identify pets in yards and adjust automatically to challenges like dim light or unexpected obstacles.

On the same day, Amazon also introduced “Blue Jay,” a new robotic arm built to assist warehouse workers by picking items from shelves and sorting them efficiently. Alongside that, the company presented an AI tool called Eluna, which analyzes data to improve warehouse operations and streamline logistics.

Amazon continues to push further into AI and automation, combining wearable tech, robotics, and data-driven insights to make its delivery network smarter and more efficient.