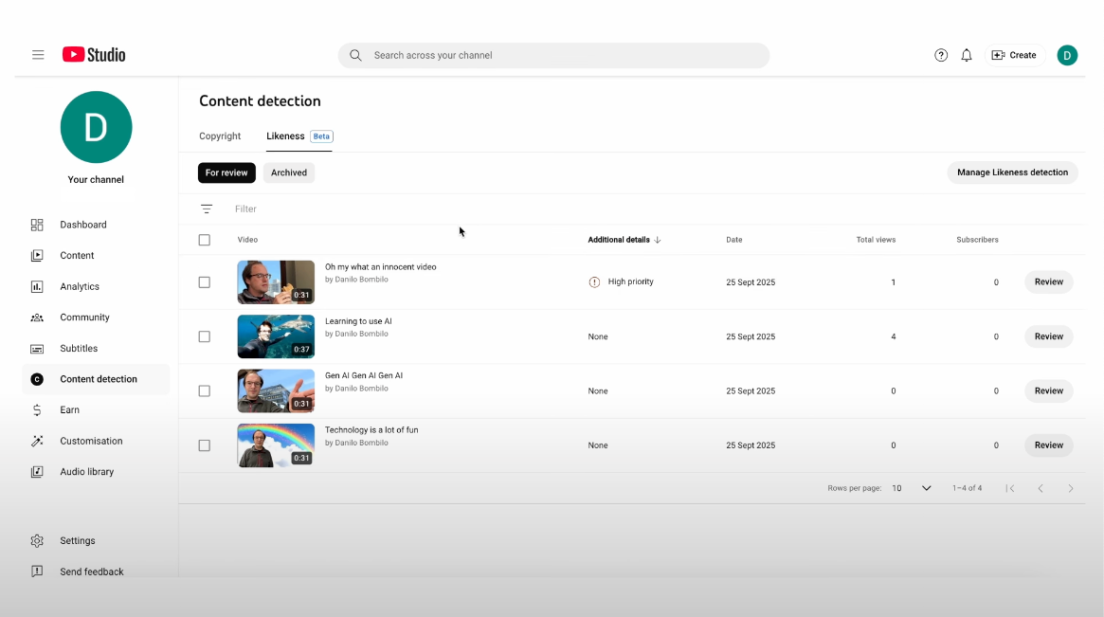

YouTube announced on Tuesday that it has officially launched its likeness-detection technology for creators in the YouTube Partner Program, ending the tool's pilot phase. The tool lets creators request the removal of AI-generated videos that use their face, voice, or overall likeness without permission.

A YouTube spokesperson told TechMarge that this first rollout targets eligible creators, who began receiving email notifications earlier in the day. According to the company, the detection system helps creators protect their identity and reputation by identifying deepfakes and AI imitations that misuse their likeness. The goal is to stop people from using creators' faces or voices without permission to endorse products, spread misinformation, or create deceptive content. Several such cases have surfaced in recent years, including one in which the company Elecrow used an AI-generated version of YouTuber Jeff Geerling's voice to promote its products without his approval.

Through its Creator Insider channel, YouTube explained how the new feature works and how creators can start using it. To set up the tool, creators must visit the “Likeness” tab, agree to the data processing terms, and use their smartphone to scan a QR code displayed on their screen. The QR code leads to a verification page where the creator uploads a photo ID and records a short selfie video for identity confirmation.

After YouTube approves access, the creator can see all videos detected as using their likeness. From there, they can file a removal request under YouTube’s privacy rules or submit a copyright claim if the content violates intellectual property rights. Creators also have the option to archive videos flagged by the system.

Participation is voluntary. Creators can opt out of likeness detection at any time, and YouTube will stop scanning for matching videos within 24 hours of that decision.

YouTube began testing this feature earlier in the year, building on efforts that started in 2024, when it partnered with Creative Artists Agency (CAA). That collaboration focused on helping public figures, athletes, and online personalities find AI-generated videos that misuse their likeness.

In April, YouTube also voiced its support for the proposed NO FAKES Act, a bill that addresses growing concerns over AI-generated replicas that mimic real people's voices or appearances to deceive viewers. With the official rollout of its likeness-detection technology, YouTube is taking a further step to protect creators from the misuse of artificial intelligence and to strengthen trust on its platform.